MineRL: Training AI Agents in Open World Environments

Yonsei University

Though deep reinforcement learning has led to breakthroughs in many difficult domains, these successes have required an ever-increasing number of samples. As state-of-the-art reinforcement learning (RL) systems require an exponentially increasing number of samples, their development is restricted to a continually shrinking segment of the AI community. Likewise, many of these systems cannot be applied to real-world problems, where environment samples are expensive. Resolving these limitations requires new, sample-efficient methods. To facilitate research in this direction, the MineRL Competition on Sample Efficient Reinforcement Learning using Human Priors was proposed [1].

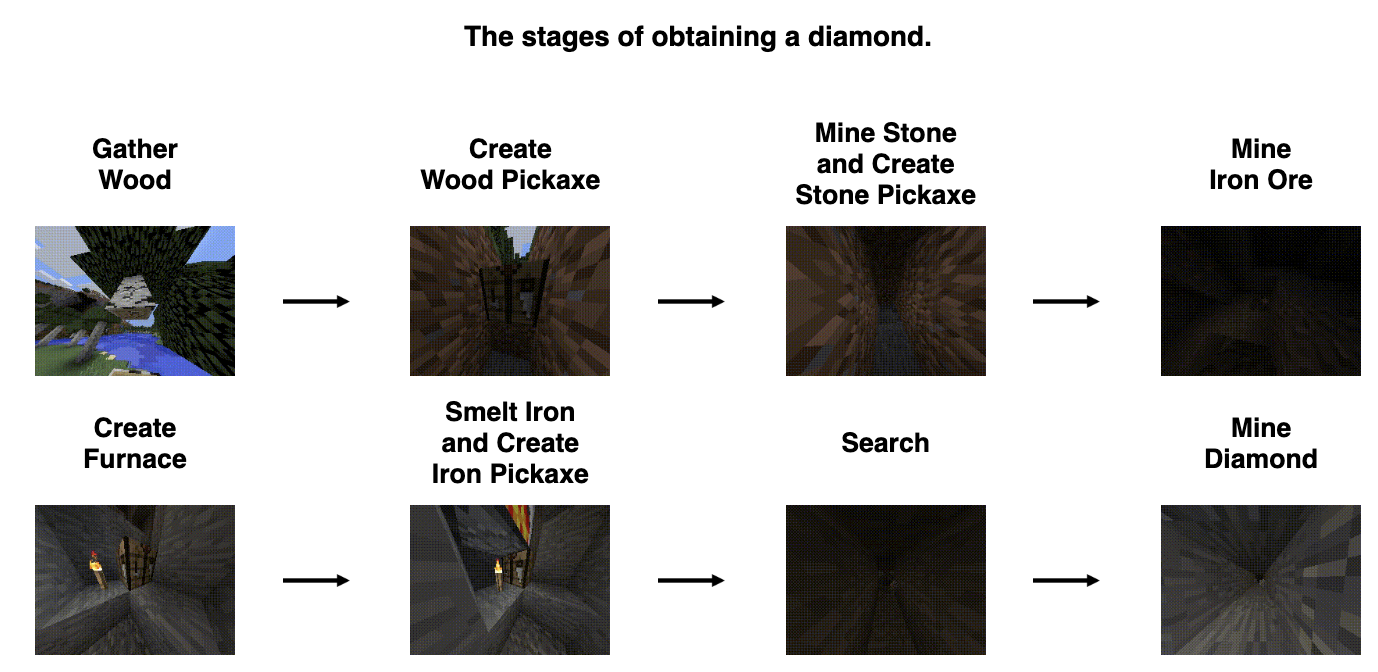

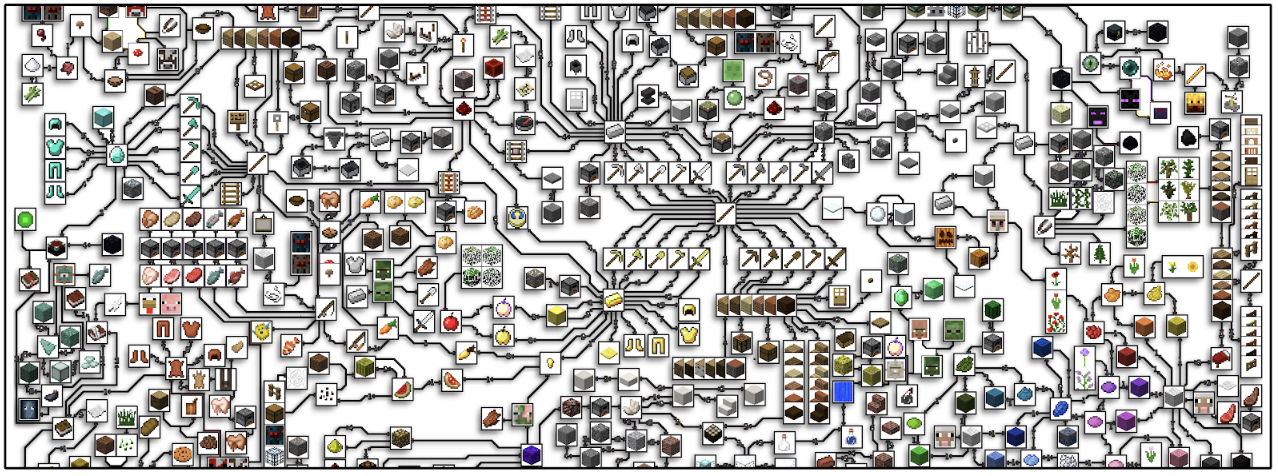

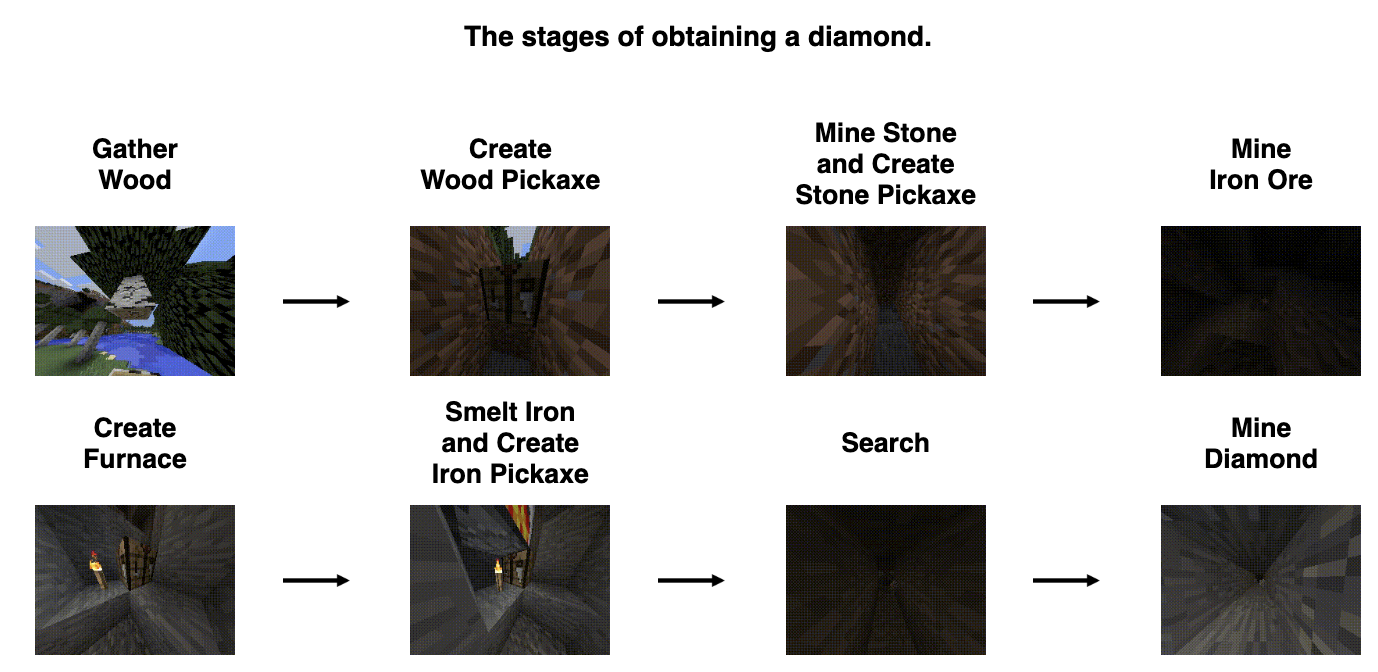

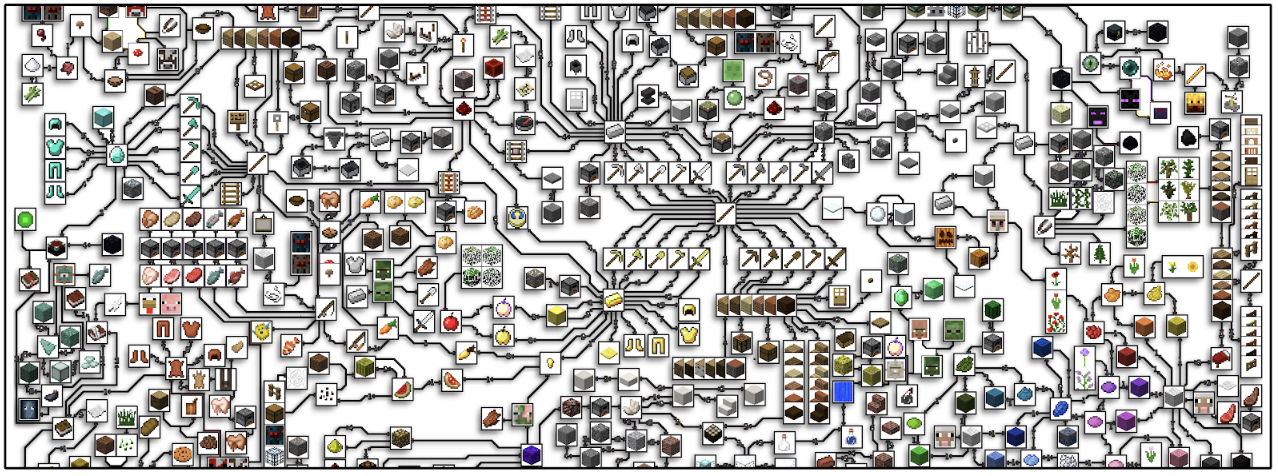

The task of the competition is to solve the MineRLObtainDiamond-v0 environment. In this environment, the agent begins in a random starting location without any items and is tasked with obtaining a diamond. This task can only be accomplished by navigating the complex item hierarchy of Minecraft.

The agent receives a high reward for obtaining a diamond, as well as smaller auxiliary rewards for obtaining prerequisite items. In addition to the main environment, the competition provides a number of auxiliary environments. These consist of tasks that are either subtasks of ObtainDiamond or other tasks within Minecraft.
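
The reward structure described above can be illustrated with a small, self-contained sketch. It does not call the MineRL simulator. The item names, the numeric reward values, the once-per-item payout rule, and the `MilestoneTracker` class are all assumptions made for illustration; none of them are specified in this text.

```python
# Illustrative milestone rewards for an ObtainDiamond-style task.
# ASSUMPTION: item names and values are placeholders, not taken from the text.
MILESTONE_REWARDS = {
    "log": 1,
    "planks": 2,
    "stick": 4,
    "crafting_table": 4,
    "wooden_pickaxe": 8,
    "cobblestone": 16,
    "furnace": 32,
    "stone_pickaxe": 32,
    "iron_ore": 64,
    "iron_ingot": 128,
    "iron_pickaxe": 256,
    "diamond": 1024,
}


class MilestoneTracker:
    """Hypothetical helper: pays each milestone reward once, on first pickup."""

    def __init__(self, rewards=None):
        self.rewards = dict(MILESTONE_REWARDS if rewards is None else rewards)
        self.obtained = set()

    def step(self, inventory):
        """Return the reward earned by items that appear in `inventory` for the first time."""
        reward = 0
        for item, count in inventory.items():
            if count > 0 and item in self.rewards and item not in self.obtained:
                self.obtained.add(item)
                reward += self.rewards[item]
        return reward


tracker = MilestoneTracker()
first = tracker.step({"log": 3})                 # first log: small auxiliary reward
second = tracker.step({"log": 2, "planks": 4})   # log already paid, planks are new
```

Under these assumed values, the diamond reward dominates every auxiliary reward, which matches the description of a sparse main goal supported by smaller prerequisite rewards.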

We used a large-scale collection of over 60 million frames of human demonstrations, so that expert trajectories could be exploited to minimize the algorithm’s interactions with the Minecraft simulator.

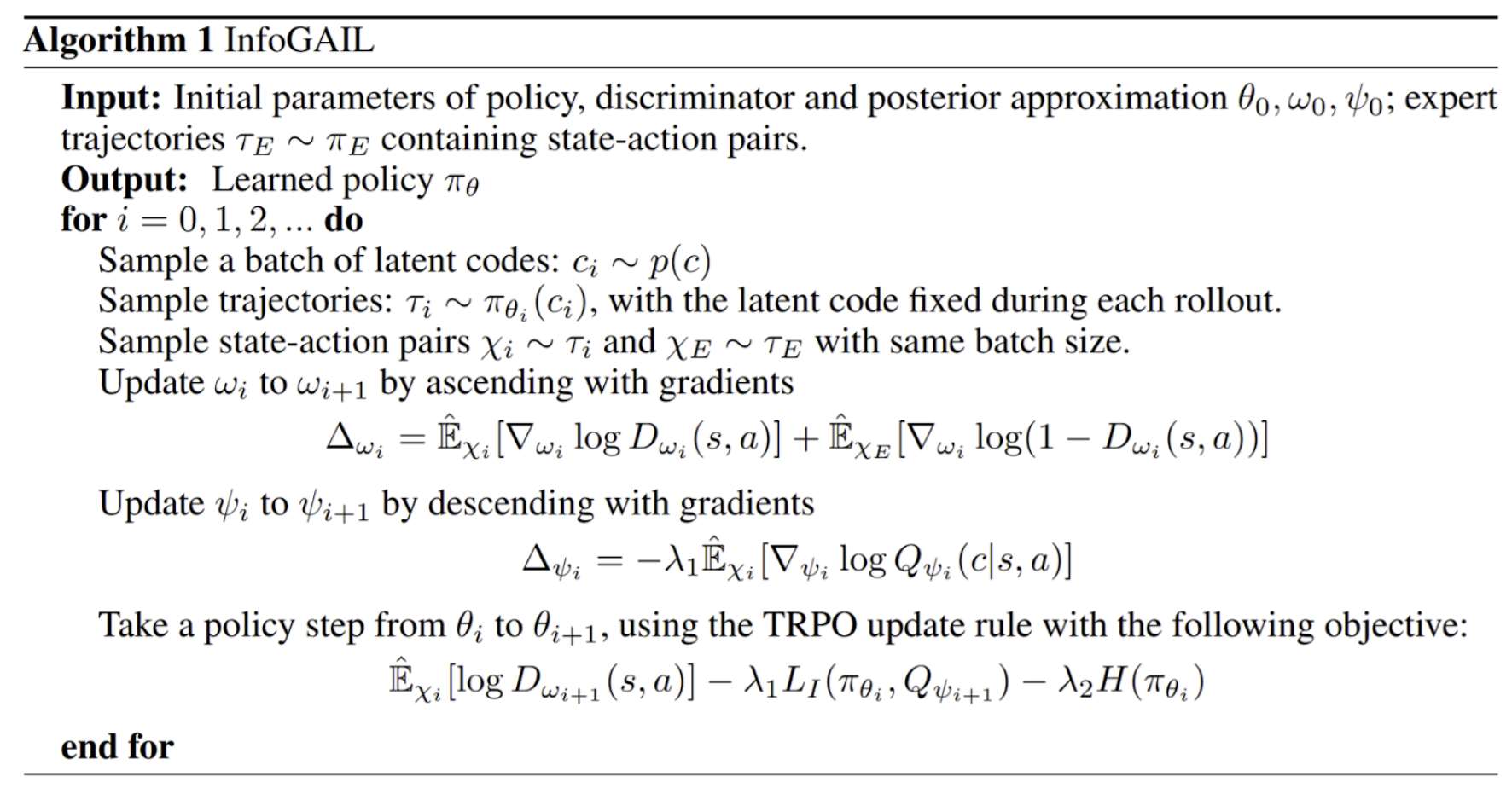

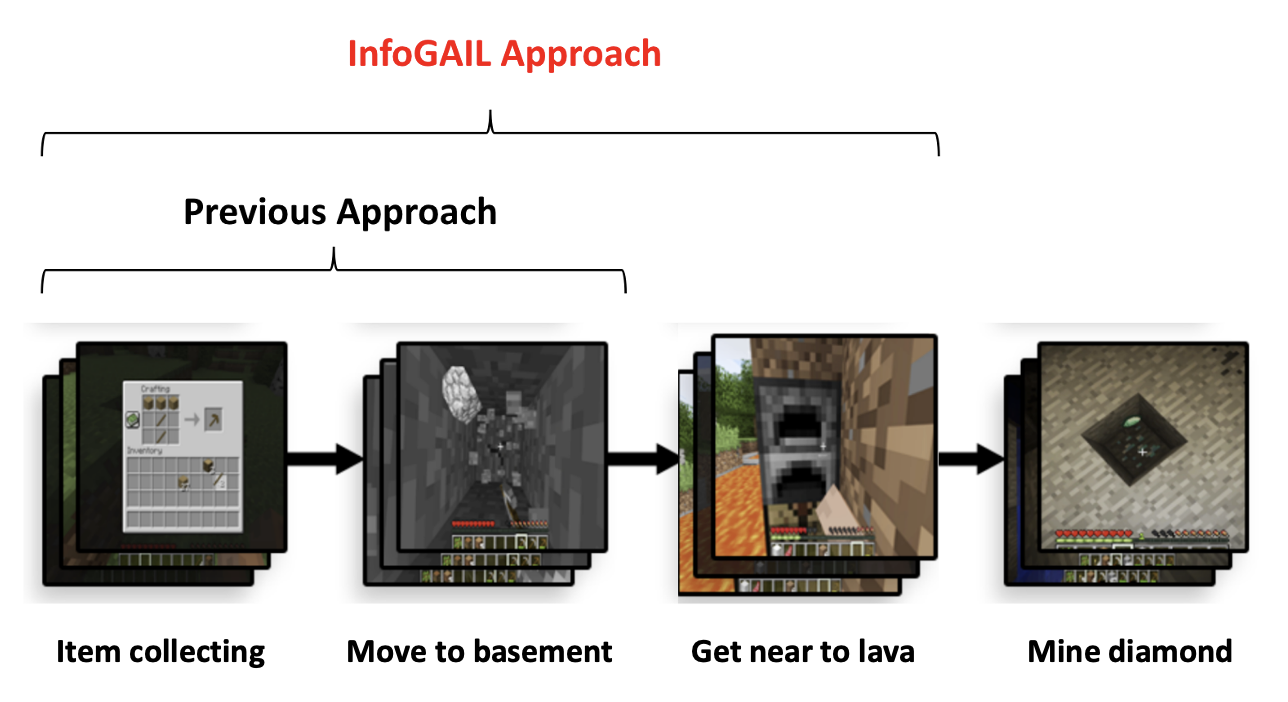

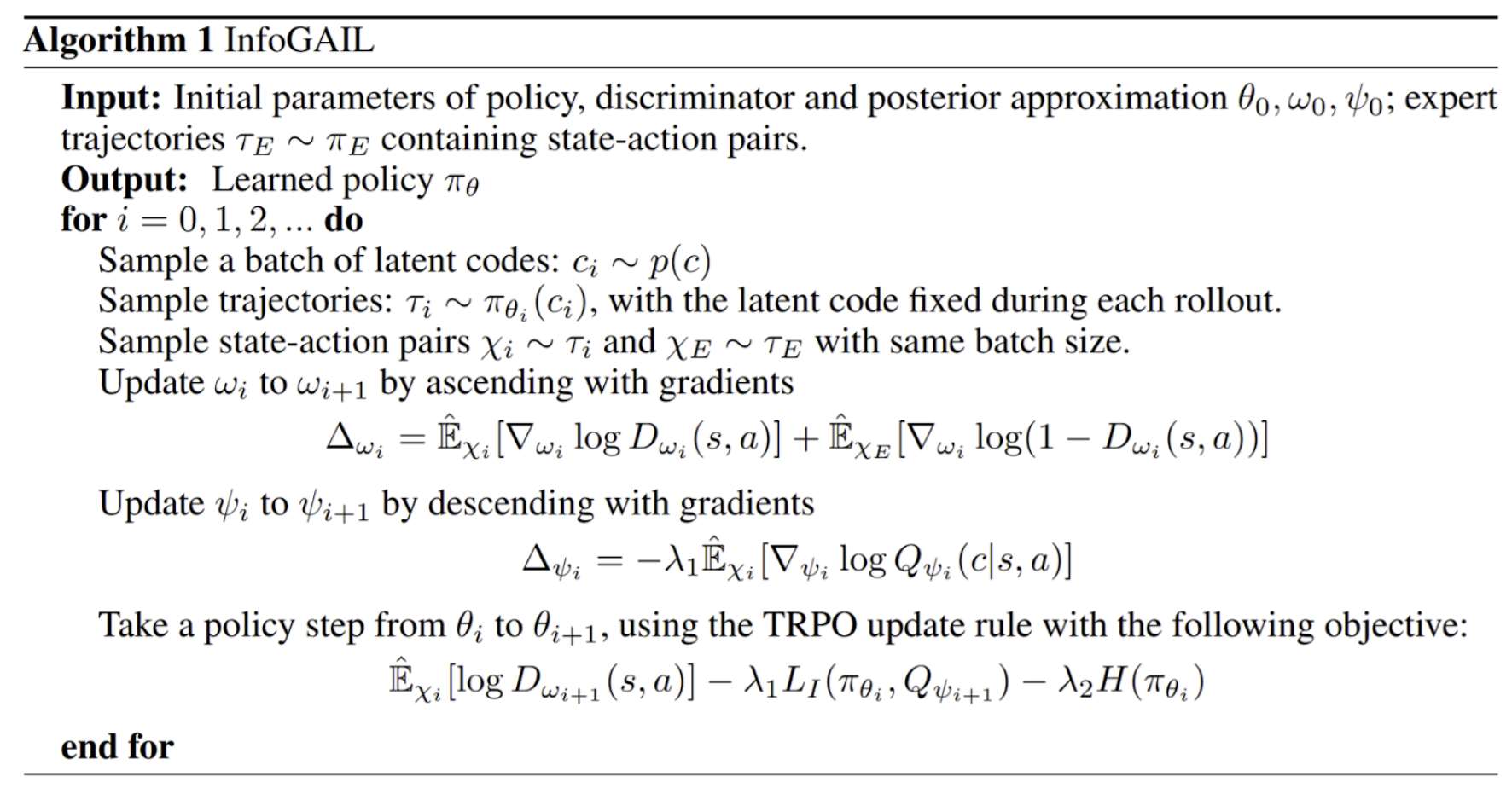

The goal of imitation learning is to mimic expert behavior without access to an explicit reward signal. Expert demonstrations provided by humans, however, often show significant variability due to latent factors that are typically not explicitly modeled [2]. In this work, we applied the InfoGAIL algorithm to the MineRL task to infer the latent structure of expert demonstrations in an unsupervised way.
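
As a rough sketch of how an InfoGAIL-style method can replace the missing reward signal, the policy can be trained on a surrogate reward with two terms: one for fooling a discriminator that separates policy behavior from expert behavior, and one for keeping the latent code recoverable from the behavior. The function name, argument names, default weight, and the convention that the discriminator outputs the probability of "generated by the policy" are assumptions for illustration, not details of the model used here.

```python
import math


def surrogate_reward(d_prob, q_prob, lam=0.1, eps=1e-8):
    """Per-step surrogate reward for an InfoGAIL-style policy update (sketch).

    d_prob: discriminator output D(s, a), ASSUMED to be the probability that
            the state-action pair came from the policy rather than the expert.
    q_prob: posterior Q(c | s, a) assigned to the latent code c that the
            policy was actually conditioned on.
    lam:    weight of the latent-code term (hypothetical default).
    eps:    small constant that keeps the logarithms finite.
    """
    imitation_term = -math.log(d_prob + eps)   # larger when D mistakes the pair for expert data
    info_term = lam * math.log(q_prob + eps)   # larger when the code is easy to recover
    return imitation_term + info_term


# The reward grows when the discriminator is fooled and the code is recoverable.
fooled = surrogate_reward(d_prob=0.1, q_prob=0.9)
detected = surrogate_reward(d_prob=0.9, q_prob=0.9)
```

The second term is what distinguishes this from plain adversarial imitation: without it, the policy could ignore the latent code and the different modes of the demonstrations would not be separated.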

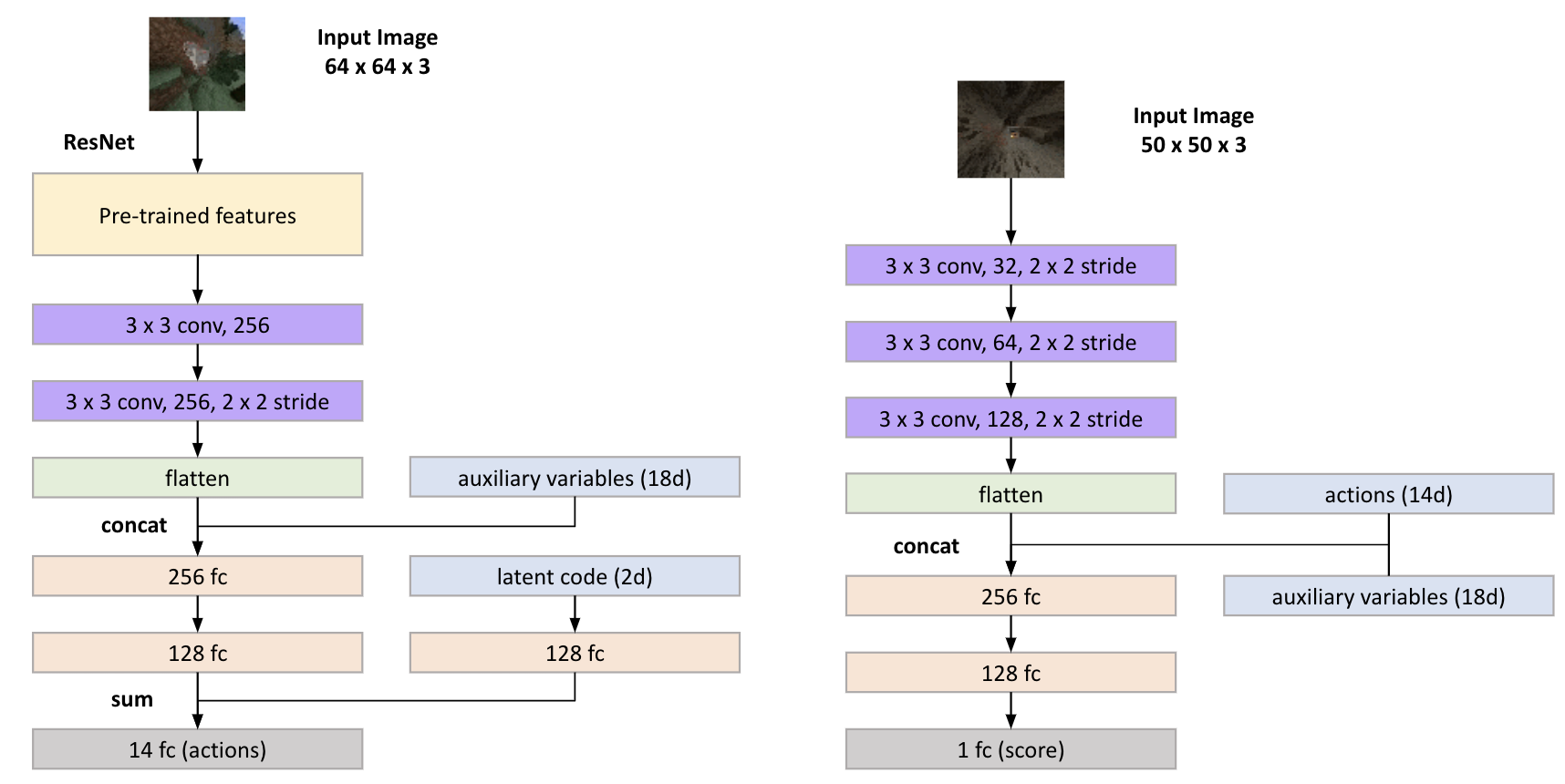

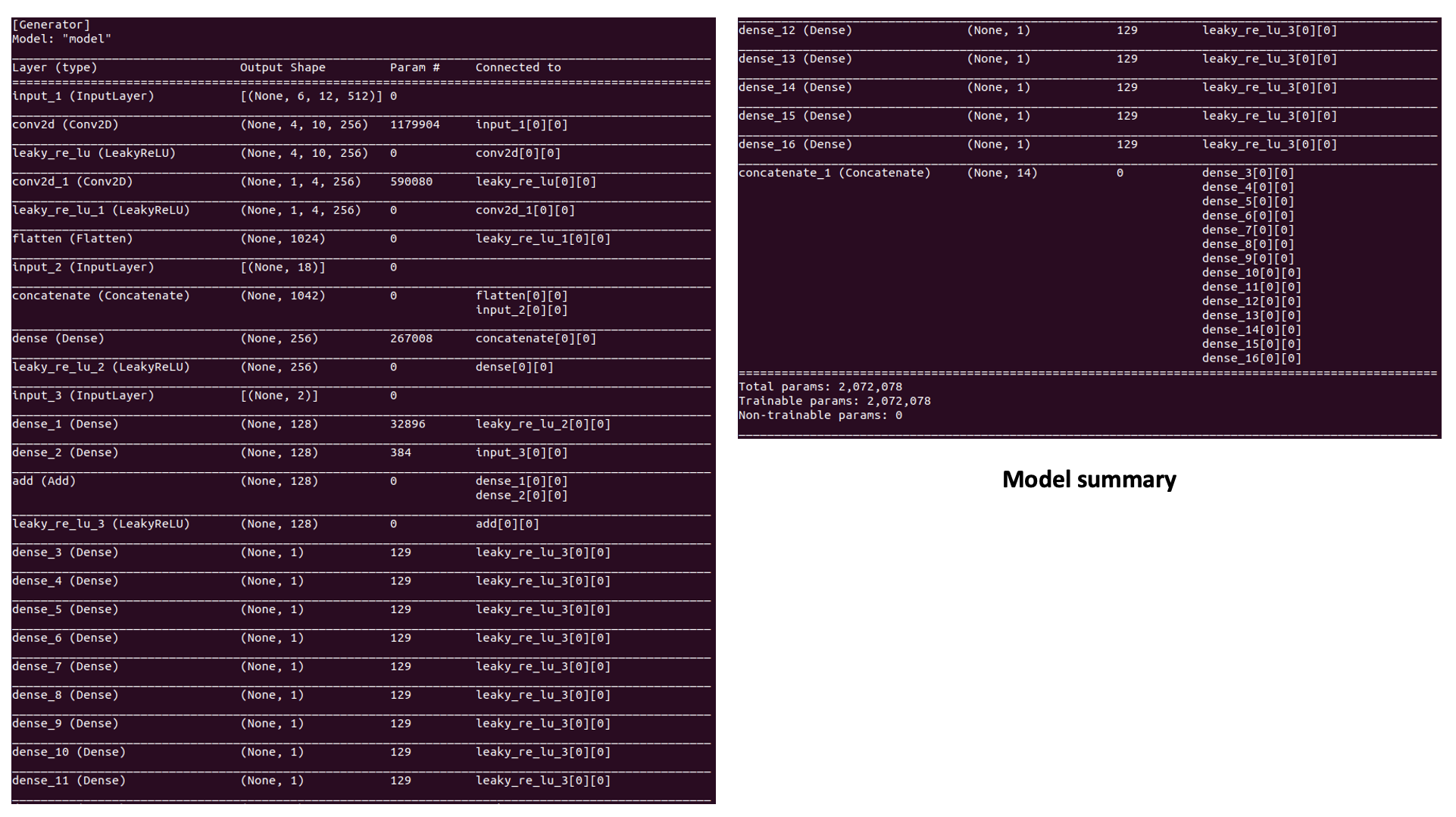

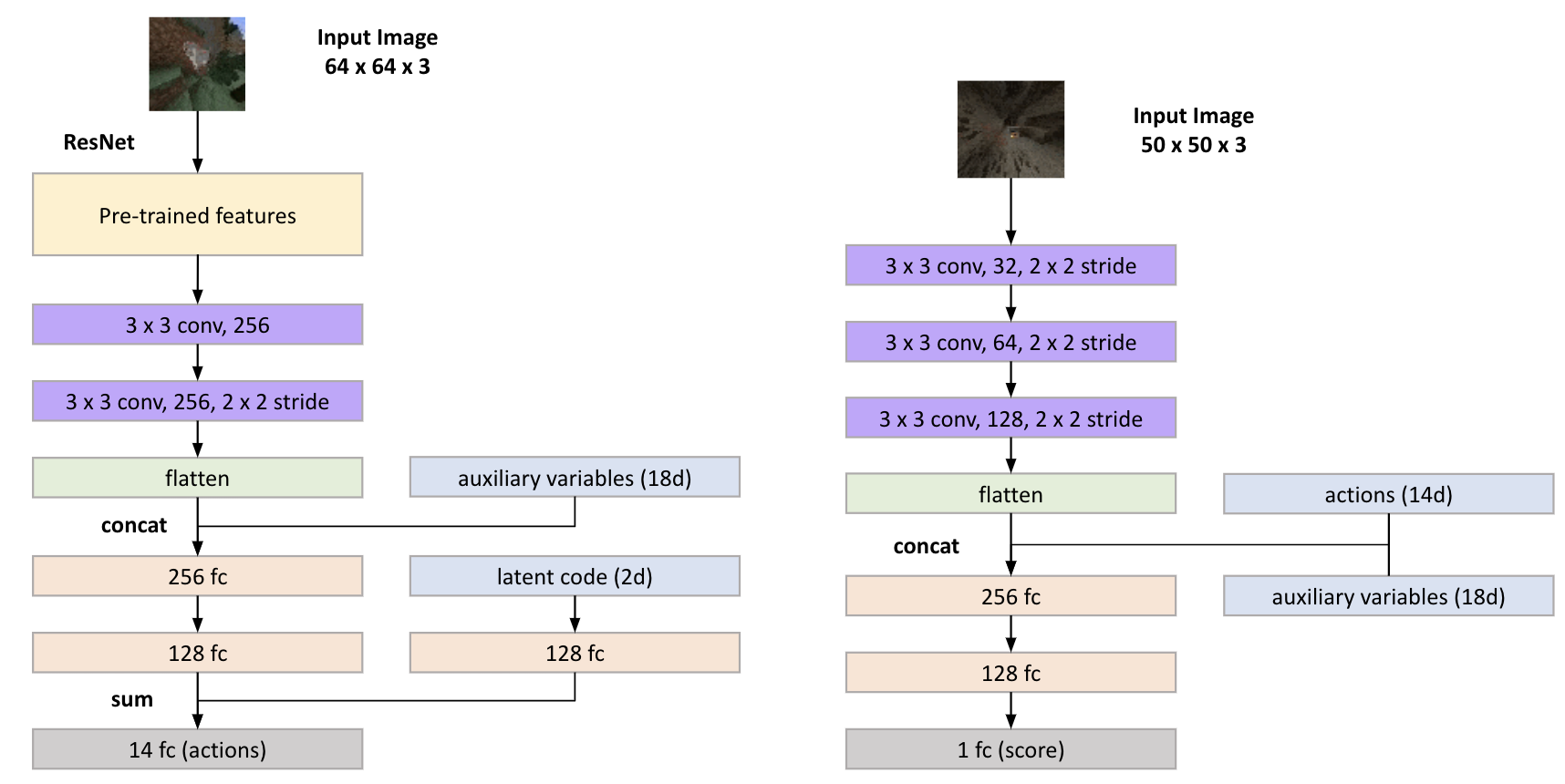

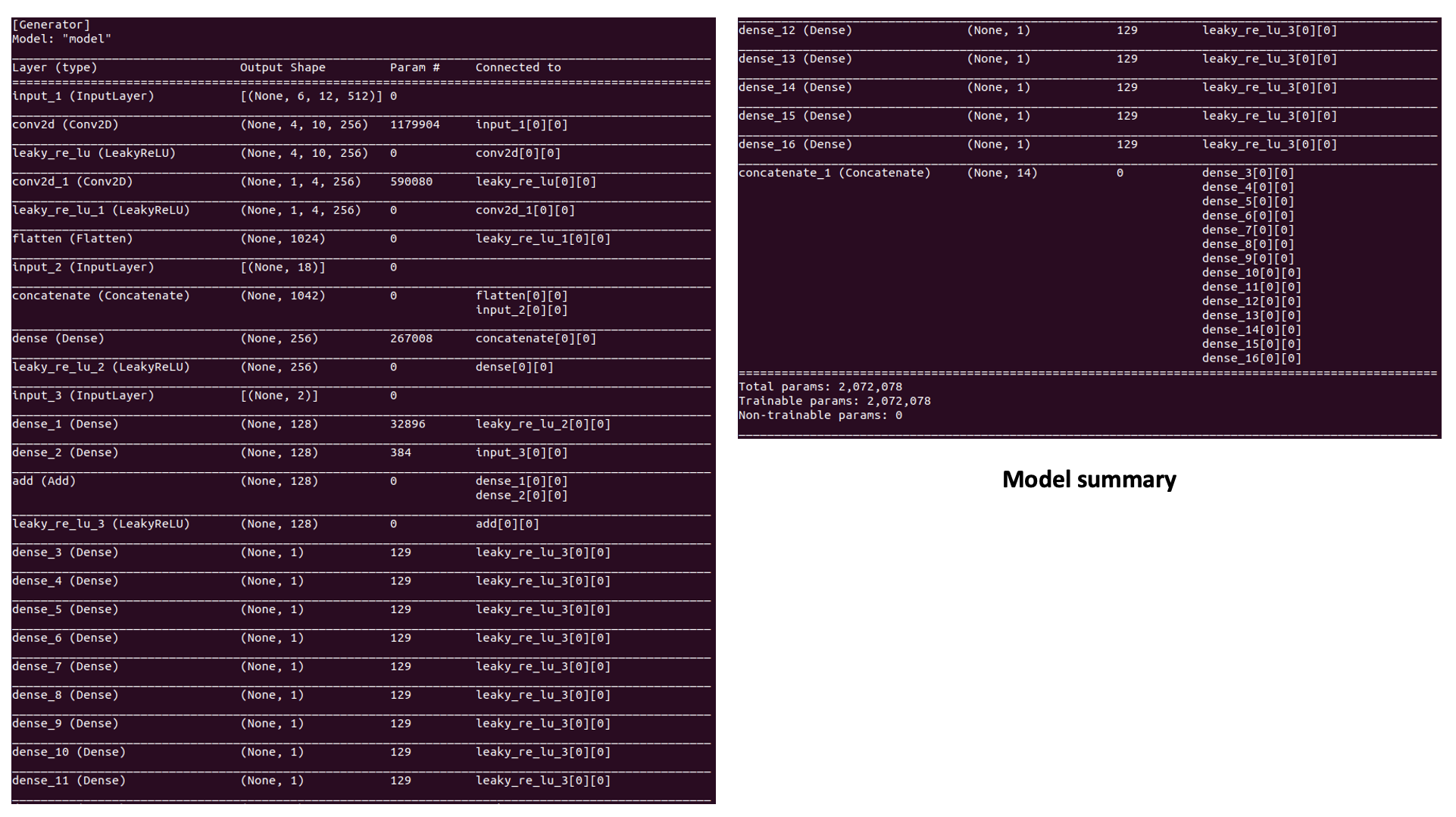

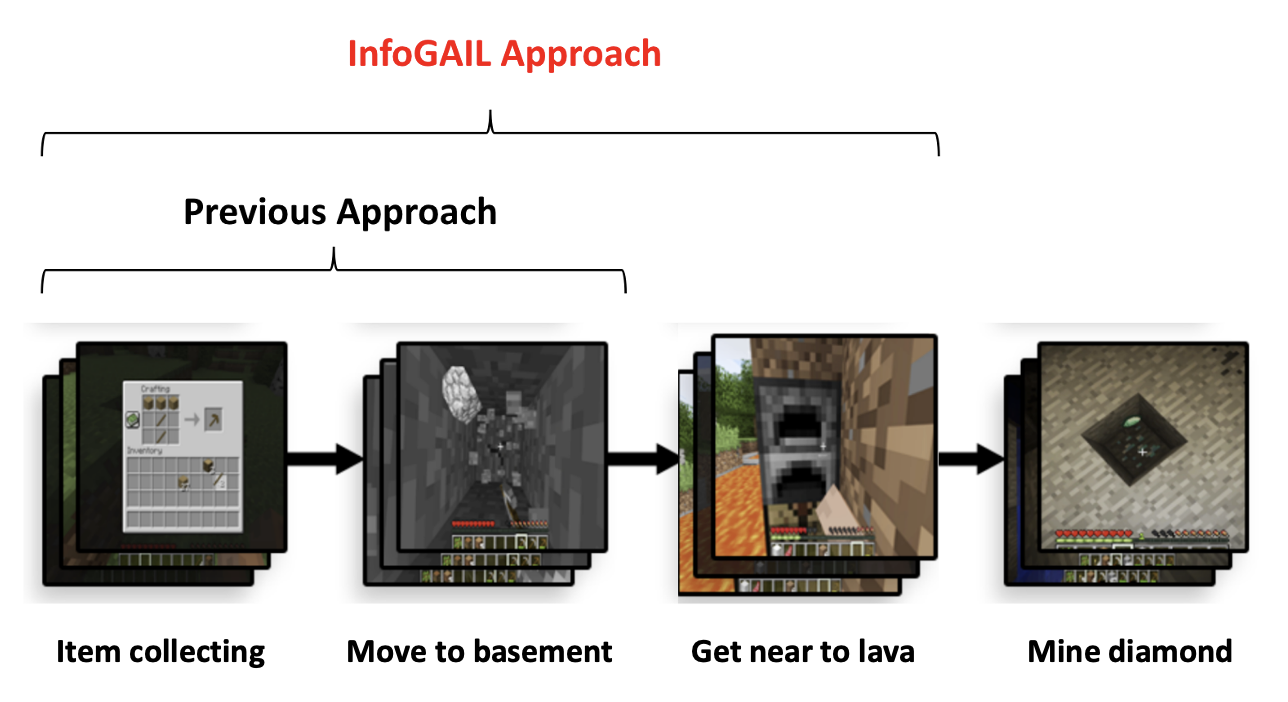

The figure above shows the model summary and the four sub-steps for mining a diamond. Although we, too, could not obtain a diamond from the environment, our agent performed best among the previously used baselines, such as PPO, a rule-based agent, and naive behavioral cloning (BC). Future work should evaluate how efficiently the algorithm uses the expert demonstrations and how agents can benefit from language instructions.

References

[1] William H. Guss, Cayden Codel, Katja Hofmann, Brandon Houghton, Noboru Kuno, Stephanie Milani, Sharada Mohanty, Diego Perez Liebana, Ruslan Salakhutdinov, Nicholay Topin, et al., "The MineRL Competition on Sample Efficient Reinforcement Learning using Human Priors," NeurIPS Competition Track.

[2] Yunzhu Li, Jiaming Song, and Stefano Ermon, "Inferring The Latent Structure of Human Decision-Making from Raw Visual Inputs," NeurIPS.